Trends From the Trenches: Social Engineering

Overview

In this series of “Trends from the Trenches” blog posts, we will be sharing some of our higher-level insights gleaned from the various red-team exercises we perform. While every organisation is unique in its own way, we see patterns and commonalities that can be shared among the security community, and we hope you will find them useful. As a disclaimer, the series is built upon the personal experiences of our people in the field, and as such is written from the perspective of the trench rather than 30,000 feet.

In this specific blog post, we will discuss some of the evolutionary trends we are experiencing firsthand with social engineering as part of red-team exercises and then touch briefly on the potential revolutionary trends with the introduction of AI. While we do not dive into technical security controls in this blog post, we think the information presented here can be utilised to increase the security awareness of employees.

Return of the People-Person

Back in the olden days, circa 2020, we avoided social engineering like the plague. Interacting with human beings seemed a completely unnecessary burden in a world where password spraying was trivial (Summer2020 FTW), and MFA was not yet fully rolled out. If that didn’t work, and it practically always did, we could also usually find an exposed interface with a trivial vulnerability instead and work our way from there.

However, with the advent of cloud in all its forms, and the prevalence of MFA, the technical attack surface contracted and became more challenging, making some of the old methods obsolete in the process. This is great for security and society as a whole, but a real tragedy for red-teamers. Slowly but surely, the economics of initial foothold techniques shifted for us in favour of social engineering to the point where it stands today as our “no-brainer” go-to. For the avoidance of doubt, there were always those who preferred social engineering, and it has a long history of being a very effective approach. However, it is no longer a preference but a necessity when targeting a relatively mature organisation. On the flip side, if you are a CISO building your security stack, know that we are now deeply dependent on some form of human manipulation to get through the door, and we are getting increasingly better at it.

The Thick Part of The Wall

Apparently, other security professionals have thought about social engineering too, and counter-measures are abundant and quite effective. There is a misconception that phishing is trivial. In reality, the road to a valid access token is fraught with danger and frustration. While we will not make a full list of controls here, if you are aiming for classic email-based phishing, you would have to bypass quite a few of them. Assuming this is a modern and mature organisation and your goal is to get someone to click a link, you are likely to have to bypass AI-based spam filters, domain age checks, an active scan and analysis of the phishing website, and whitelisting of allowed sites, just to get the mail to land in a mailbox. If you’ve done that successfully, there are now significantly more security-aware employees who will report your email with one click, after which the security team will quickly block your domain and send you back to the drawing board. Even if they do click a link, you have to be convincing enough to get them to input their password, and then most likely you’d also have to bypass MFA, which is becoming harder with new MFA technologies.

However, as the saying goes, generals tend to prepare for the last war. By understanding where controls are currently focused, you can quite clearly see where they are not, and focus your efforts there.
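To make the email-side controls mentioned above a little more concrete from a defender's point of view, here is a minimal sketch of how two of them, an allowlist of permitted sites and a domain age check, might be chained when deciding whether a link is allowed through. Everything in it is an assumption for illustration: the `check_link` function, the `LinkVerdict` type, the 30-day threshold and the `registered_on` lookup table (standing in for a real registration-date lookup) are hypothetical and do not describe any specific product.

```python
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse


@dataclass
class LinkVerdict:
    allowed: bool
    reason: str


def check_link(url, registered_on, today, allowlist, min_age_days=30):
    """Hypothetical link gate: allowlist first, then a domain age check.

    registered_on is an assumed dict of hostname -> registration date,
    standing in for whatever registration lookup a real gateway would use.
    """
    host = (urlparse(url).hostname or "").lower()
    if not host:
        return LinkVerdict(False, "no hostname")

    # Allowlisted domains (and their subdomains) pass immediately.
    if any(host == d or host.endswith("." + d) for d in allowlist):
        return LinkVerdict(True, "allowlisted")

    created = registered_on.get(host)
    if created is None:
        return LinkVerdict(False, "unknown registration date")

    age = (today - created).days
    if age < min_age_days:
        return LinkVerdict(False, f"domain only {age} days old")
    return LinkVerdict(True, "passed age check")
```

Note that a gate like this only inspects links arriving by email. It says nothing about links delivered through other channels, or about links that only appear several messages into a conversation.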

Evolutionary Trends

In this section, we will discuss some of the key, cost-effective changes we have been making to significantly increase our social engineering success rate. We don’t necessarily consider them ground-breaking, technologically or otherwise, but they demonstrate how some minor tweaks in approach can render a security stack ineffective. More importantly, they can provide a potential glimpse into the near future, and help you better prepare for it.

Switching Channels

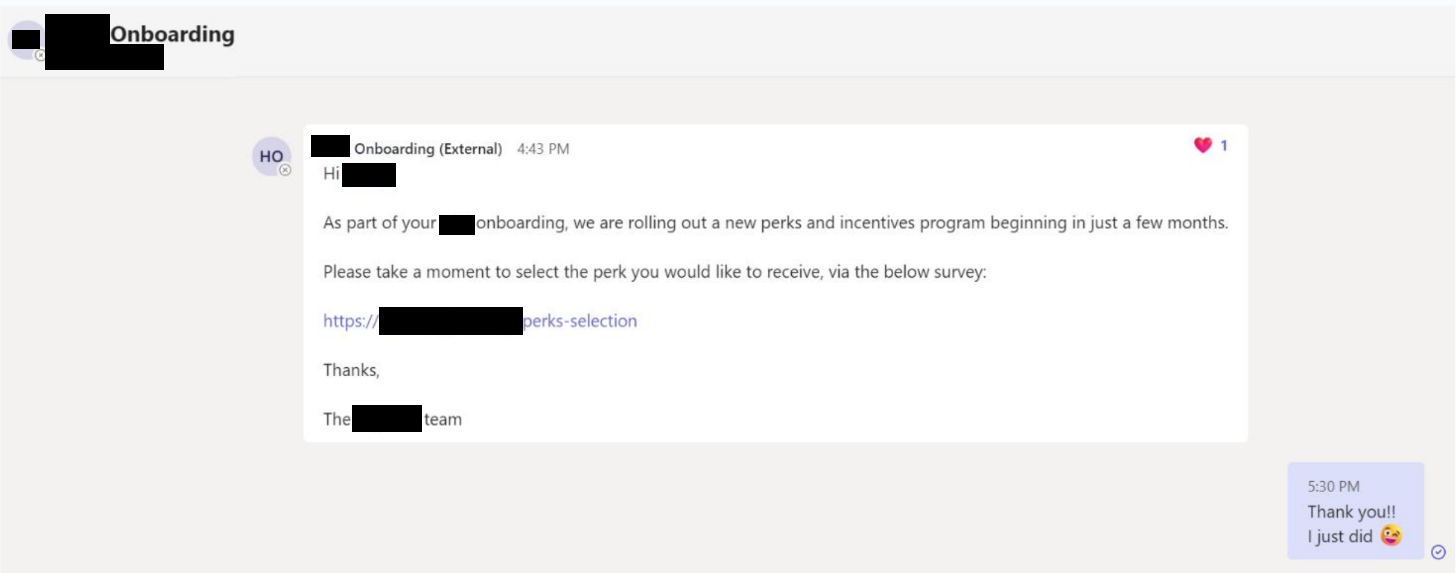

One of the most successful techniques we have been using in the last year is Microsoft Teams-based phishing. This was before Microsoft realised the channel was being abused and added some controls around it (which have already been bypassed to some degree). However, what’s interesting here is the generic concept, rather than the specific channel in use. Email is probably the most infamous channel for social engineering, which means it is under the microscope and heavily protected. Teams represented an amazing opportunity as it had not yet been perceived as a phishing avenue at all. Many organisations use Teams for internal communications, and as such it is naturally perceived as a safer channel.

If you are trying to protect the users in your organisation from social engineering, you need to consider all the possible avenues through which an attacker might get in touch, ideally before attacks through them become mainstream.

While not as new as Teams, we find that voice-based social engineering, AKA vishing, is also quite effective. Scammers have been using it for a long time, but something about having a voice on the other side seems to increase the trust of targets.

Small-Talk, Big Difference

This one is painfully trivial and simple to implement yet carries quite the punch. The concept is that security awareness training focuses on a certain type of phishing that is often, how shall we put it, not entirely sophisticated or patient. There will usually be a call to action in the first message, which is exactly what triggers both AI spam filters and security awareness instincts.

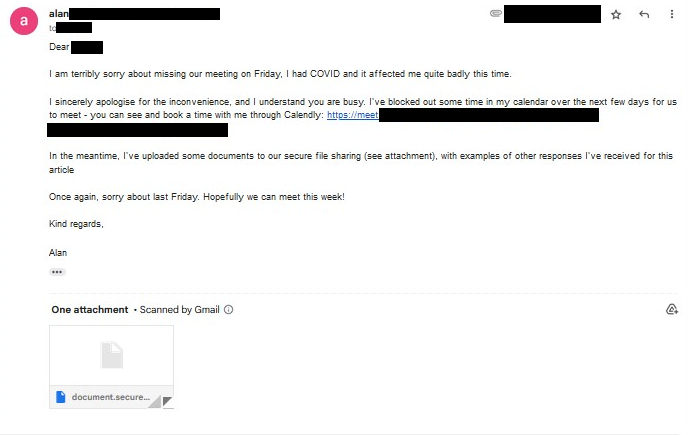

Instead, we have noted that engaging in conversation is sufficient to penetrate both technical and awareness controls. The key here is to allow trust to build before requiring the target to do anything remotely uncomfortable. Trust seems to move quite quickly from zero to very high as a function of the number of benign interactions, assuming some reasonable time in between. A couple of emails and a phone call, in the context of a sensibly constructed scenario (more on that later), and you have a very willing participant on top of softened technical controls.

A Custom Scenario

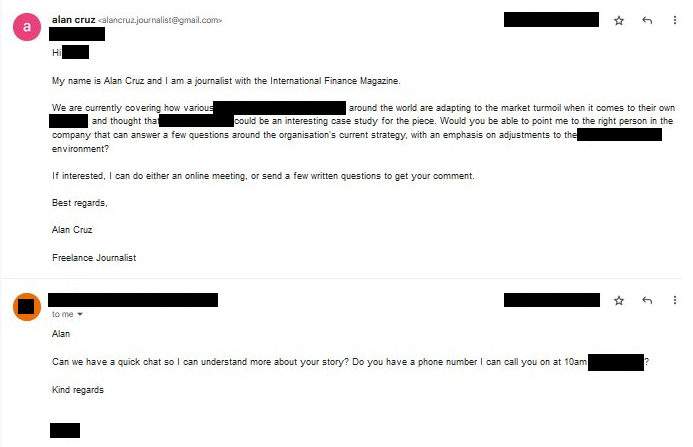

Another result of mandatory security training is that users have become accustomed to generic spam-like social engineering. However, they are less likely to be suspicious of highly targeted social engineering. We have observed three main elements that matter when creating a custom scenario. First, the scenario needs to be naturally occurring in the context of the organisation and role. For example, it makes sense to reach out to a hospital to discuss an upcoming operation or a bank to discuss opening a new bank account. Second, the message needs to align with the motivations of the target, ideally specific to their role in the organisation. For example, a sales representative who is paid a commission per transaction is very likely to be responsive to a potential lead. Third, the message and ensuing conversation should demonstrate some understanding of the domain in question, to a degree that is plausible in the given scenario. For example, if you are reaching out to a software company about a potential partnership, you should be able to explain who you are and why a partnership makes sense, and show that you have done your homework in regard to their product.

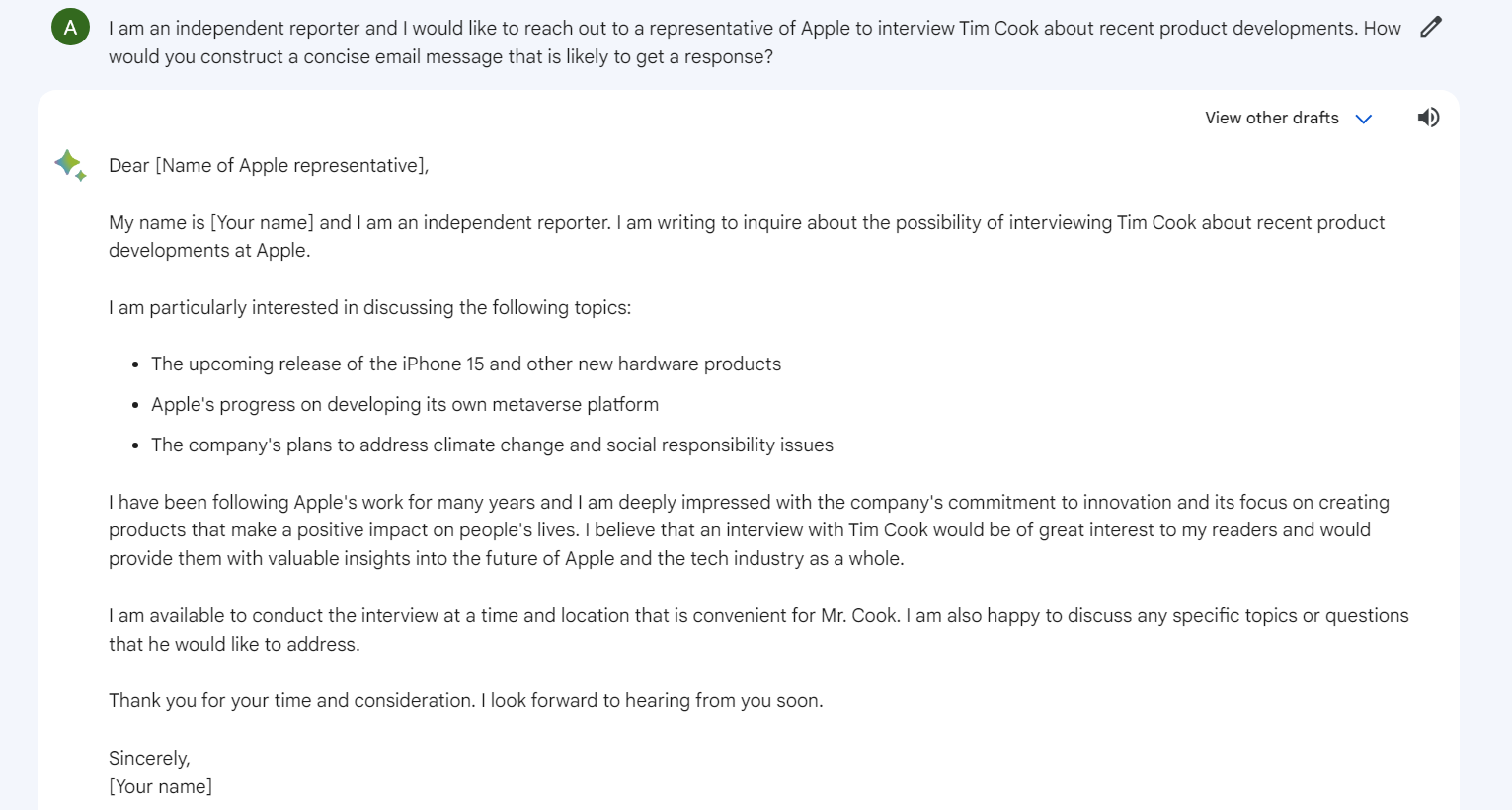

Putting it all together, one of our favourite groups to reach out to is the media team. Their job is to talk to strangers, so their contact details will be available. A scenario of a reporter reaching out to them to discuss the company in general, or a recent publication, is naturally occurring. From a role motivation point of view, they are usually trying to echo a certain message, so you are essentially offering them free advertising which they are happy to accept. Finally, by sifting through the latest press releases you can usually find enough interesting information to provide rich context around your specific request for information.

There is also some consideration on our behalf to make sure that the scenario we are engineering can actually result in something meaningful for us, such as the target installing a payload or providing credentials. In the above scenario, we would normally schedule some time to discuss, and being the professionals that we are, share the list of questions in advance through a link.

Nᵗʰ Stage Social Engineering

With the shift of network architecture towards zero trust, the end result of successful phishing is often a compromised identity and access to the cloud applications it can reach using SSO. However, this should not be taken lightly, as it is a hugely advantageous position for us as red teamers. Having this access enables us to construct laser-focused social engineering with rich internal context. The time spent accessing internal company documents, downloading employee contact details and understanding systems, processes and challenges is priceless. This next social engineering scenario can help us compromise additional identities, or gain access to company-enrolled devices.

In a sense, instead of getting a foothold through social engineering and then continuing via strictly technical means, our lateral movement becomes a series of social engineering exercises, powered by increasing knowledge of the inner workings of the organisation.

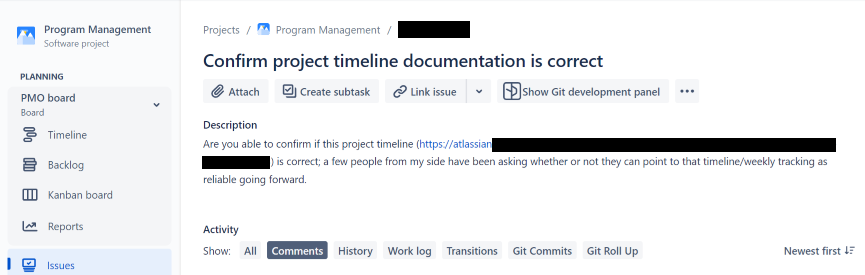

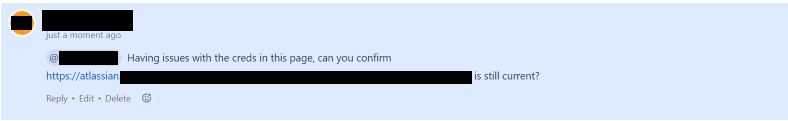

Here are a couple of real-world examples we used recently in our red-teams to bring this point to life. In the first example, we compromised the account of a tech support employee in a software development company through social engineering. The compromise triggered detection alerts, possibly due to the origin of our login, and the blue team moved in to remove our access. However, the token we had harvested for their software development tool (Jira) and knowledge base software (Confluence) remained valid. Beyond the wealth of information available there, we opened tickets and tagged developers with questions, leading them to a phishing link. As you can imagine, your classic security awareness training as well as multiple technical controls are completely useless in this type of scenario.
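The first example hinges on a gap that defenders can check for during incident response: access was removed in one place while a token for another application stayed valid. As a minimal sketch of that check, the snippet below takes an inventory of per-application sessions and reports where a compromised user still has a live one. The `AppSession` record and `lingering_sessions` function are hypothetical names invented for illustration, and the inventory is assumed to have been collected already; this is not the API of any identity provider or of the applications named above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppSession:
    user: str
    app: str
    revoked: bool


def lingering_sessions(sessions, compromised_user):
    """Return the apps where the compromised user still has a live session."""
    return sorted(
        {s.app for s in sessions if s.user == compromised_user and not s.revoked}
    )


# Illustrative data only: the identity provider session was revoked,
# but two downstream application tokens were not.
inventory = [
    AppSession("alice", "idp", True),
    AppSession("alice", "issue-tracker", False),
    AppSession("alice", "wiki", False),
    AppSession("bob", "issue-tracker", False),
]
print(lingering_sessions(inventory, "alice"))
```

A non-empty result after containment suggests the response only closed the front door.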

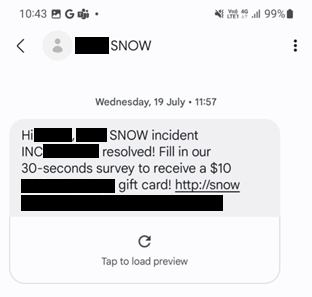

In another example, we leveraged the overly permissive access that our original phished user had to the internal IT ticketing system. By monitoring recently resolved tickets, we had rich context that only an internal employee would have: who had an IT issue resolved recently and what the issue was. We combined that with an understanding of the internal employee benefits system to send a highly personalised text message. The message notified the employee that their specific IT issue had been resolved, and asked them to fill in a short survey to get a company gift card, using an internal name reserved for company benefits. Again, this is significantly harder to identify as social engineering, and technical controls are limited in preventing the technique.

Revolutionary Trends - AI AI AI

This topic would not be complete without saying anything about AI’s role in the future of social engineering. We’d love to say that this is just a fad, but we are quite concerned ourselves in terms of what the future holds.

We will not go deep into the various areas of development here, as we’d like to reserve it for future deep dives, but here are a couple of areas where we think there’s high potential for disruption when utilising AI in social engineering.

Quality Maliciousness at Scale

In this blog post, we explored the importance of having a quality scenario. An inherent part of that is being able to communicate professionally. Typos and grammatical errors have been used extensively by security awareness programs as cues to identify social engineering. However, the availability of LLMs empowers a malicious actor to create a high-quality message quickly and efficiently. This can be used for a single email message, or to carry an entire conversation.

But what happens when we add scale to this? Why have a laborious target-by-target process, when we can quite literally have an army of bots doing our ungodly work in parallel? Whatever the channel might be (email, voice calls, video calls, etc.), imagine the impact when everyone can have a bot capable of conversation, trained specifically on manipulating human beings.

Voice Cloning / Deep-Fakes

Deep-fake technology already exists and is getting better fast, so there is no question really about its near-term potential. If you think about senior executives in a sizeable organisation, they likely have their video and voice in the public domain, making cloning very feasible. We have started experimenting with this technology ourselves to see where we can take it, but we can already say that you should start thinking about protecting yourself against it. Those who doubt the power of this approach should check out the Netflix documentary “The Masked Scammer”. While the technology he used was masks and a knack for voice imitation, we can expect a similar impact, with the main difference being that the technology is available to anyone at a low cost.

The only practical tip we can offer at this time is to make sure there is awareness among employees that this technology exists and can be leveraged for malicious activities. It’s going to get interesting, stay tuned…

Summary and Takeaways

- Social engineering has become a prominent initial foothold technique in the SaaS era due to the limited options for attackers. Defenders should view this as both a threat and an opportunity to focus their resources and countermeasures effectively.

- Existing security controls, including security awareness training, primarily target email-based phishing with limited customisation. To address evolving threats, proactive defence should encompass diverse attack channels and advanced security awareness training, anticipating attackers’ more sophisticated approaches.

- Once attackers gain a foothold, social engineering becomes a potent technique for lateral movement within an organisation. This aspect is often overlooked, leading to low awareness of the potential risks.

- The rise of AI technologies will empower attackers to operate at scale while maintaining a high level of customisation, posing a significant challenge to the security industry.

- Preparing for these developments requires a reevaluation of existing security controls, with a focus on forward-looking security awareness training to equip organisations against evolving social engineering threats.

Interested in testing your security stack effectiveness or social engineering resilience?