Cylance, I Kill You!

Update: 07/Sep/2019

We had the honour of presenting our findings at today’s BSides Sydney (Slides).

We took this opportunity to make some of the yet unpublished materials public.

We can now reveal that the undisclosed game we’ve used is “Rocket League”, but many others work just as well (we’ve tried Fortnite, for example).

Some more goodies include the special sauce: the list of strings that appear in Rocket League’s executable and are part of Cylance’s model. Just append these to any malicious executable to make Cylance believe it’s benign.

As of today, the bypass is still exploitable on the home edition (Cylance SmartAV). The vendor has told us the enterprise edition (CylancePROTECT) has been fixed, but we were unable to verify that. If you have access to the enterprise edition and can confirm the fix, please let us know in the comments box at the bottom of the page.

TL;DR

The applications of AI in security are clear and potentially useful; however, AI-based products offer a new and unique attack surface. Namely, if you could truly understand how a certain model works, and the type of features it uses to reach a decision, you would have the potential to fool it consistently, creating a universal bypass.

By carefully analyzing the engine and model of Cylance’s AI-based antivirus product, we identified a peculiar bias towards a specific game. Combining an analysis of the feature extraction process, its heavy reliance on strings, and its strong bias for this specific game, we were able to craft a simple and rather amusing bypass. Namely, by appending a selected list of strings to a malicious file, we can change its score significantly and avoid detection. This method proved successful for 100% of the top 10 malware for May 2019, and close to 90% for a larger sample of 384 malware files.

Read the full post to understand the research process itself, the inner workings of an advanced AI based EPP and how we found the universal bypass.

Another Brave New World

Once every few years, the cyber security world is blessed with the birth of a baby silver bullet. It starts small, with a few enthusiastic parent companies hailing the newborn prince as our savior, telling the stories of its invincible power. A few years and millions of marketing dollars later, it grows and becomes an absolute powerhouse. The mere whisper of its name adds trust to your product, gets you appearances in the most influential conferences, and helps seal that much-needed funding round.

With time, the silver appears to be just coating that soon starts wearing off and some brave souls start seeing it for what it is — another tool, hopefully an effective one, in the never-ending process which is cyber security.

Such is the story of many “silver bullets” we have seen over the years, and inevitably such will be the story of AI and machine learning.

AI has been touted as the silver bullet to end them all with significant marketing force — after all, if we can teach a machine to think like a human analyst, only with the addition of big data and almost infinite processing power, then surely, we will be able to create an invincible mechanism. A brain so powerful that it could not be fooled by any other mechanism.

Right…

In this post we will show how we can reverse the model of an AI based EPP product, and find a bias enabling a universal bypass. We chose Cylance for practical reasons, namely, it is publicly available and widely regarded as a leading vendor in the field.

However, we believe that the process presented in this post can be translated to other pure AI products as well.

AI for Millennials

AI is an extremely important and fascinating technological field with profound implications for human society. However, we are not AI experts, heck, we’re not even power users. With that disclaimer in mind, let’s try to understand how AI works conceptually, so we can hypothesise later on how we may fool it.

In the context of endpoint protection, we are faced with a classification problem. Given a file, we need to classify it as either malicious or benign.

There are many approaches to this, but generally speaking, you are trying to train an artificial brain to identify certain properties of the subject and then apply some form of mathematical model to calculate whether what you are looking at is a certain object.

Let’s assume for example, that we are interested in having a machine classify objects as either birds or human beings.

A specific AI model may look at certain attributes of the object like weight, height, whether it has a beak, wings etc. to make a decision. By exposing a model to numerous samples of birds and human beings, the differences will start showing. For example, most human beings that we are aware of do not have beaks or wings. Thus, if something possesses either of those attributes, there’s a high likelihood that it is a bird. This is of course an oversimplification of a truly beautiful field of knowledge, but it will suffice for now.

By applying the same approach to classification of files as malicious or benign, we get clear and substantial benefits:

- Prediction by design — a well-trained model should have the ability to identify a malicious file it has never seen and has no prior knowledge of.

- Infrequent updates — a model is trained once and can last years without updates.

- Lower resource consumption — AI vendors claim that the nature of their technology leads to lower CPU, memory and disk consumption.

Overall, AI should help you detect more threats, earlier, while incurring a lower management and computing resource overhead for your organization. Can I have two please?

Data Nerd vs. Hacker Mindset

Hackers will always try to find the most economical way to get their way.

Going back to the birds and human beings classification problem discussed above — if we understand that the AI model relies heavily on the existence of a beak and wings to make a decision, we can go buy ourselves a goofy bird costume.

If, on the flip side, the model looks at brain weight to body weight ratio, we’ll need a completely different trick.

Whatever the important attributes may be, if we identify them, we have a good chance of consistently defeating the artificial brain — that is, make malicious files look benign through some sort of treatment.
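To make the bird costume concrete, here is a deliberately toy sketch. The `Subject` type and the single rule in `classify` are invented for illustration and have nothing to do with any real product; the point is only that once the decisive attributes are known, faking them flips the decision.

```python
from dataclasses import dataclass


@dataclass
class Subject:
    weight_kg: float
    has_beak: bool
    has_wings: bool


def classify(subject: Subject) -> str:
    """Toy rule: anything with a beak or wings is called a bird."""
    if subject.has_beak or subject.has_wings:
        return "bird"
    return "human"


person = Subject(weight_kg=80.0, has_beak=False, has_wings=False)
person_in_costume = Subject(weight_kg=80.0, has_beak=True, has_wings=True)

print(classify(person))             # human
print(classify(person_in_costume))  # bird: the costume flips the decision
```

Note that the weight never changed; the classifier simply never looked at it.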

A Chatty Log

All right, time to roll up our sleeves and start our research, exciting!

As a first stage we would like to create a process to determine the classification of a given file, as determined by the Cylance engine. This would allow us to understand at a later stage if we were capable of fooling it.

By activating verbose logging we can capture high-resolution information that Cylance is kind enough to provide. We always say that verbose software is like a drunk stranger in a bar: it will tell you all about its problems. Here is an excerpt from the log file, indicating a detection of a malicious file (Mimikatz with a single modified byte):

AnalyzeFile hashed C:\Users\Administrator\Desktop\mimikatz_with_slight_modification.exe 143020851E35E3234DBCC879759322E8AD4D6D3E89EAE1F662BF8EA9B9898D05

LocalAnalyzeItem LocalInfinity.ComputeScore begin

LocalAnalyzeItem, C:\Users\Administrator\Desktop\mimikatz_with_slight_modification.exe score -852 detector execution_control

Detected as 'Unsafe'! path:'C:\Users\Administrator\Desktop\mimikatz_with_slight_modification.exe' hash:143020851E35E3234DBCC879759322E8AD4D6D3E89EAE1F662BF8EA9B9898D05

We can see that the engine has a scoring mechanism and our modified Mimikatz was scored -852. By empirically testing various good and bad files, we later determined that the score ranges from -1000 for the most malicious files to +1000 for the most benign ones.
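If you want to automate this check, the score can be pulled out of the log with a small script. The sketch below is an assumption-laden illustration: the regular expression is modelled only on the single `LocalAnalyzeItem,` line quoted above, and other product versions may format the line differently.

```python
import re

# Pattern modelled on the single log line quoted above; other builds may differ.
SCORE_LINE = re.compile(
    r"LocalAnalyzeItem,\s+(?P<path>.+?)\s+score\s+(?P<score>-?\d+)"
    r"\s+detector\s+(?P<detector>\S+)"
)


def parse_score(line: str):
    """Return (path, score, detector), or None if the line does not match."""
    match = SCORE_LINE.search(line)
    if match is None:
        return None
    return match["path"], int(match["score"]), match["detector"]


sample = (
    r"LocalAnalyzeItem, C:\Users\Administrator\Desktop"
    r"\mimikatz_with_slight_modification.exe score -852 detector execution_control"
)
print(parse_score(sample))
```

Lines that do not carry a score, such as the `ComputeScore begin` line, simply return `None`.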

Good job Cylance, you identified a mutated Mimikatz, already putting you well beyond 50% of the endpoint protection products out there (don’t believe us? Compare original Mimikatz with one-byte modified Mimikatz).

Hold tight though, we are just getting started.

Diving In

We now had a clear objective — understand Cylance’s scoring mechanism, so we can later bypass it.

To do so, we started by reverse engineering the code, which was of course obfuscated, but constructed in a clear way, making it possible to follow.

We also found some publicly available information about the inner workings of the product in patent submissions and public talks. One of these resources describes the engine as being an ‘Ensemble’ (which is a group of models), and we did find a class named ‘EnsembleReader’. It is used to load the ensemble from a resource embedded in one of the DLL files. The extracted model is encrypted; however, the algorithm and the quite original key are rather clear:

public class EnsembleReader : ILogAccess, IEnsembleHeader, IDisposable

{

protected const int RandomHeaderSize = 3072;

protected const string KeyAndIv = "I am decrypting Cylance's intellectual property.";

protected Stream _stream;

protected byte[] _activeKey;

protected byte[] _activeIV;

protected bool _loadSectionData;

By following the trail of how the model information is used, we reached the ‘SampleScore2PE’ assembly. Its name suggests it scores PE files, which naturally piqued our interest. We looked at the exposed interfaces of that assembly and found the following gem:

public ISampleScore Create(string inputModelsPath)

{

return (ISampleScore) new SampleScoring2PE(inputModelsPath);

}

Before discovering this interface we were already planning on using the old school way of revealing the secrets of the model file — use the key to decrypt the model and painstakingly analyse it for days or weeks. Instead, we realized that we can just build our own tiny .NET executable and link against these assemblies much like Cylance.Engine.Core does. We then called the Create() function with a path to the model file we’ve extracted earlier (still encrypted, BTW), and we got an object that exposes a ComputeScore() function.

Can this get any better (it does actually)?

SampleScoreFactory2PE factory = new SampleScoreFactory2PE();

SampleScoring2PE scorer = factory.Create("test_model.bin") as SampleScoring2PE;

Stream test_file = File.Open("mimikatz_with_slight_modification.exe", FileMode.Open);

Dictionary<string, object> extraData;

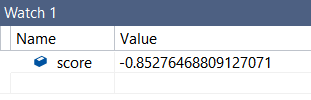

double score = scorer.ComputeScore(test_file, out extraData);By executing the code above, we received a score of -0.852764 as output for our modified Mimikatz file, which looks awfully similar to the -852 we noticed in the log files earlier.

This may not seem like much, but this is actually a very solid basis for our research. We now have an easy process to test PE files against Cylance’s scoring engine, and more importantly, we have a starting point for dynamically debugging the scoring process.
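The relationship between the two numbers looks like a simple scaling by 1000. The sketch below is our guess at that mapping: truncation toward zero is an assumption chosen because it reproduces the -852 from the log (rounding would give -853), and `to_log_score` is a made-up helper name, not something taken from Cylance's code.

```python
def to_log_score(raw_score: float) -> int:
    """Scale a raw score in [-1, 1] to the integer form seen in the log.

    Truncation toward zero is an assumption that fits the value reported
    in this post; it is not taken from Cylance's code.
    """
    return int(raw_score * 1000)


print(to_log_score(-0.852764))  # -852
```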

Features Galore

We used a combination of static and dynamic analysis techniques to study the scoring process. The beginning of the classification journey starts with examining and measuring different features of the target object. Going back to our bird vs. humans example, this is the part where you would take measurements, check the existence of wings and beak, and quantify other aspects of the subject being classified.

Cylance uses a combination of code and data from the model itself to produce the feature vector.

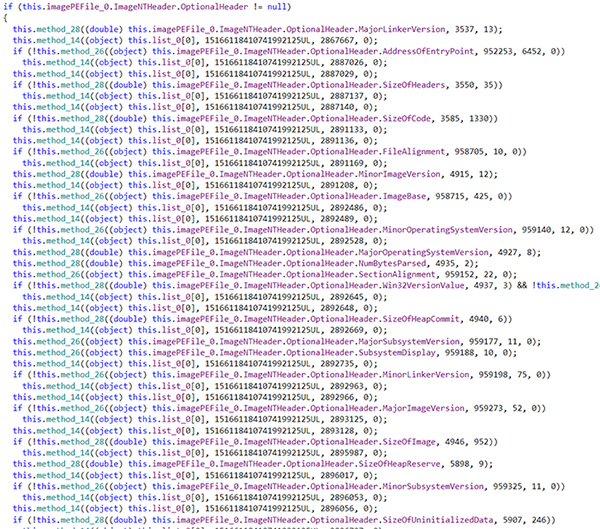

The PE file is first extensively parsed to produce a vast number of different properties of the file. Some are simple, such as the number and names of sections, while others are more complex observations that require a bit of processing to produce: for example, testing whether the PE has a correct checksum field, counting the number of instructions at the entry point, or counting the imports related to process injection.

The next major step in the classification process is to turn these extracted properties into a feature vector (AKA: feature extraction). As there are thousands of features (7,000 to be precise), we did not bother enumerating all of them. Instead, we focused on the general process that Cylance uses to transform plain file properties into a feature vector.

There are thousands of lines of code handling this transformation, but the overall logic is the same: the engine takes an input property and compares it against a known value or a list of values. One comparison, for example, checks the TimeDateStamp field from the File Header of the PE file against a list of 3523 different ranges of timestamps. Depending on which range the timestamp falls into, the engine executes a certain action.

That action is really just a sequence of instructions to increment or decrement values of the feature vector. Each action can affect one or more values, and the list of instructions is stored in the model’s data.

Let’s analyse one example:

if (!this.method_28(this.imagePEFile_0.ImageNTHeader.FileHeader.TimeDateStamp, 2, 3523))

this.method_14(2866581, 0);

In this snippet, we first call method_28 which will try to search for the extracted TimeDateStamp property in 3523 different time-date ranges. Each range has a corresponding action attached to it. If the property is not found in any of the time-date ranges, method_14 will be called which will trigger an action, designated for instances where this property is not found to be in any of the “known” ranges.
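The lookup-then-action pattern described above can be sketched in a few lines. Everything in this example is hypothetical: the ranges, the feature indices and the deltas are invented, and the real engine stores its 3523 ranges and instruction lists in the model data. Only the control flow (match a range and run its action, otherwise run a fallback action) mirrors the snippet.

```python
from bisect import bisect_right

# Hypothetical model data: sorted, non-overlapping [start, end) timestamp
# ranges, each paired with an action. An action is a list of
# (feature_index, delta) instructions.
RANGES = [
    (1_000_000_000, 1_100_000_000, [(0, +1.0)]),
    (1_300_000_000, 1_400_000_000, [(1, +1.0), (2, -0.5)]),
]
FALLBACK_ACTION = [(3, +1.0)]  # used when no range matches
RANGE_STARTS = [start for start, _end, _action in RANGES]


def apply_action(features, action):
    """Increment or decrement the feature values named by the action."""
    for index, delta in action:
        features[index] += delta


def score_timestamp(features, timestamp):
    """Apply the action of the matching range, or the fallback action."""
    pos = bisect_right(RANGE_STARTS, timestamp) - 1
    if pos >= 0:
        start, end, action = RANGES[pos]
        if start <= timestamp < end:
            apply_action(features, action)
            return True
    apply_action(features, FALLBACK_ACTION)
    return False


features = [0.0] * 4
score_timestamp(features, 1_350_000_000)  # falls in the second range
score_timestamp(features, 1_200_000_000)  # falls between ranges
print(features)  # [0.0, 1.0, -0.5, 1.0]
```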

At the end of this very long process, after executing countless actions, we end up with a vector containing 7000 feature values. This feature vector is the extract of the PE file, and it alone will determine the score and classification of the file.

The next phase is to apply the model to the extracted feature vector. The process starts with normalization and additional post-processing of the feature vector, transforming it into a format that is usable mathematically (we won’t go into full details here).

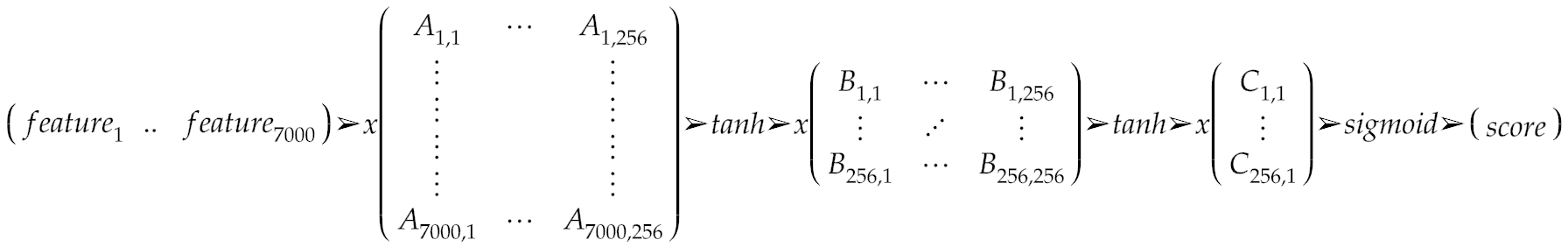

Then, the engine uses 3 different matrices that are part of the model’s data to transform the feature vector into a single value, which is the final score of the file. As discussed, we are not machine learning experts, but we have seen academic papers suggesting it is possible to approximate a neural network using matrix multiplication, and it all seems to add up: in order to improve performance, Cylance likely created an approximation of their model in the form of several matrix multiplications. After each multiplication, the engine applies an activation function (tanh / sigmoid). This seems to be a very common technique used in neural networks to introduce non-linearity into the model in order to allow computation of non-trivial problems.

How were these matrices calculated in the first place though?

We can’t be sure, but by combining what we’ve learned from analysing the product, open source information, and the little we know of how AI is used in other industries, we can draw a plausible explanation of the process that was used to create these matrices.

Cylance probably started by collecting a huge repository of malicious and benign files. They then generated a very large feature vector for each, much larger than the final 7000 features.

Using statistical models, they reduced the list of features down to the most meaningful 7000 (AKA: feature selection).

Then, they created sets of deep neural networks with varying configurations (number of nodes, layers, activation functions, etc.) and trained the models on the repository of files. Using an iterative, Darwinian process, they continued removing and exploring configurations until only those that exhibited the best properties remained (e.g. high accuracy, low false positive rate).

The next step would have been to approximate the model using matrix multiplication, the essence of which are the matrices we see in the model data.

Going back to the code, we can see that the first matrix multiplication uses the 7000 feature vector as input and outputs 256 2nd order features. These are then fed into another layer of neural network, represented as another matrix, yielding an additional set of 256 3rd order features. The final layer of the neural network is approximated using the last matrix multiplication which results in a single number, representing the final score of the file.
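The three-matrix pipeline can be sketched as a plain feed-forward pass. This is a minimal illustration, not the engine's code: the weights are random placeholders, the layer sizes are scaled down from the 7000, 256, 256 and 1 described above so that it runs quickly, and using tanh after every multiplication (including the last) is our assumption.

```python
import math
import random


def dense(weights, inputs, activation=math.tanh):
    """One layer: multiply a (rows x len(inputs)) matrix by the input vector,
    then apply the activation to every output."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)))
        for row in weights
    ]


def forward(features, layers):
    """Run the feature vector through each matrix in turn."""
    values = features
    for weights in layers:
        values = dense(weights, values)
    return values[0]  # the last layer has a single output: the score


def random_layers(sizes, rng):
    """Hypothetical weights; the real ones ship in the model file."""
    return [
        [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        for n_in, n_out in zip(sizes, sizes[1:])
    ]


# The post describes 7000 -> 256 -> 256 -> 1; scaled down here to run quickly.
SIZES = (70, 8, 8, 1)
rng = random.Random(0)
layers = random_layers(SIZES, rng)
features = [rng.uniform(-1.0, 1.0) for _ in range(SIZES[0])]
score = forward(features, layers)
print(score)  # lies between -1 and 1 because of the final tanh
```

Because the final activation is tanh, the output naturally lands in the same -1 to 1 range as the scores we observed.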

These matrices and associated feature extraction processes are stored in the model file and shipped with the product we tested against. This is the “model data” we were referring to earlier, and it represents the essence of the Cylance engine itself.

Paranoid Centroid

The end product of the lengthy process of file scoring is a number in the range of -1 to 1, indicating how malicious the file is. As always, real life challenges make things more complicated: after scoring is completed using the described method, another mechanism comes into play with an override power over the earlier model.

We can only speculate as to why this mechanism was introduced, but we believe that Cylance’s team encountered some false positives and false negatives in the main model.

They could have probably adapted or improved their model but maybe they had time pressure, so the R&D team had to come up with something quick that would make the problem go away.

Whatever the drama behind the scenes may have been, they introduced an additional mechanism that is designed to target specific families of executables with the power to override the decision made by the previous model. That mechanism is called “Centroids” and it is commonly used for clustering objects. Its use in Cylance’s case is similar to a list of exceptions: once the model has classified a file, the engine uses the centroids to check whether the file is white or black listed.

To accomplish that, the engine uses a different feature vector, which uses a modified set of feature values. It normalises the values of those features by centering each around zero, with values ranging between -3 and +3, and then calculates the Euclidean distance between the resulting vector and some known, pre-calculated ones. Effectively, it tries to find if the executable is very similar to ones that were added to the model’s white/black list.

If the executable is exactly the same, the feature vectors are equal and the distance is 0. If some features are different though, the distance grows, and if it grows beyond a given threshold, the executable is not considered to be in the same cluster as the white or black listed centroid.
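Here is a minimal sketch of such a centroid check. The means, standard deviations, feature values and threshold are all invented, and normalising with a clipped z-score is our assumption about how values end up centred around zero within -3 to +3.

```python
import math


def normalise(values, means, stds, limit=3.0):
    """Centre each feature around zero and clip it to [-limit, +limit]."""
    return [
        max(-limit, min(limit, (v - m) / s))
        for v, m, s in zip(values, means, stds)
    ]


def matches_centroid(vector, centroid, threshold):
    """True if the vector lies within `threshold` of the centroid."""
    return math.dist(vector, centroid) <= threshold


# Hypothetical numbers, for illustration only.
means = [10.0, 200.0, 5.0]
stds = [2.0, 50.0, 1.0]
centroid = normalise([12.0, 250.0, 5.0], means, stds)  # a listed executable

same_file = normalise([12.0, 250.0, 5.0], means, stds)
other_file = normalise([4.0, 900.0, 9.0], means, stds)

print(math.dist(same_file, centroid))                         # 0.0
print(matches_centroid(same_file, centroid, threshold=0.5))   # True
print(matches_centroid(other_file, centroid, threshold=0.5))  # False
```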

Crossroads

With a fair bit of knowledge of how the model works in hand, we hypothesized as to how we can actually circumvent and confuse the engine.

Our first hypothesis was to try to make a malicious PE look like one of the files in the whitelist. That is, force the relevant features into the right distance from a white listed centroid. We quickly realized that this technique has little chance of working, as this mechanism relies on thousands of features, some of which are extremely hard to modify.

Our second hypothesis was that perhaps we could find some bias in the model itself: a small set of features that have a significant effect on the outcome. If we could change the malicious binary so that these features resemble good files, maybe the model will be fooled.

We took another long look at the list of features and tried to estimate the work required to take a malicious file and modify it. It felt overwhelmingly difficult, as there were thousands upon thousands of features. That is, until we came across the following lines of code:

for (int index = 0; index < this.imagePEFile_0.Strings.Length; ++index)

{

if (!this.method_26(this.imagePEFile_0.Strings[index].S, 95088, 854069, 0))

this.method_14(this.list_0[0], 15166118410741992125UL, 2847678, 0);

}

These lines traverse the entire list of strings found in the file, calculate their hashes (using Murmur64), and then search for them in a large DB containing string hashes (all part of the model’s data). For each string that is found, an action is triggered, affecting the feature vector.
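The string-driven part of feature extraction can be sketched as follows. Several things here are stand-ins: the string extraction is a simple printable-ASCII scan rather than Cylance's parser, the hash is BLAKE2b truncated to 64 bits rather than the Murmur64 the engine uses, and the hash table with its single action is invented.

```python
import hashlib
import re

# Runs of 4+ printable ASCII bytes, similar to the default of `strings`.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{4,}")


def extract_strings(data: bytes):
    """Return every printable ASCII run of at least four characters."""
    return [m.group().decode("ascii") for m in PRINTABLE_RUN.finditer(data)]


def hash64(text: str) -> int:
    """Stand-in 64-bit hash (BLAKE2b); the engine uses Murmur64 instead."""
    digest = hashlib.blake2b(text.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "little")


def apply_string_features(data, actions_by_hash, features):
    """Bump feature values for every extracted string found in the table."""
    hits = 0
    for text in extract_strings(data):
        action = actions_by_hash.get(hash64(text))
        if action is None:
            continue
        hits += 1
        for index, delta in action:
            features[index] += delta
    return hits


# Hypothetical table: one known string mapped to one instruction.
actions_by_hash = {hash64("HelloFeature"): [(0, +1.0)]}
blob = b"\x00\x01HelloFeature\x00\xffxyz\x00unrelated text\x00"
features = [0.0, 0.0]
print(apply_string_features(blob, actions_by_hash, features), features)
# 1 [1.0, 0.0]
```

The important property is that the lookup depends only on which strings are present in the file's bytes, not on where they sit or whether any code ever references them.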

Lift-Off

The latest discovery led us to believe that there might be some inherent bias in the model itself: many features that were included in the feature set (and remember: that’s the only value that matters) are a direct result of what strings exist within the executable. We can now differentiate between two types of features: ones that are based on strings, and ones that are based on some other property of the executable.

The string features are easier to manipulate and adding them to an executable should not be too difficult. Other properties can be much harder to manipulate. For example, reducing the number of sections is rather hard, and requires work which is very specific to the executable itself.

So strings are a good direction, but which are our beak and wings? What strings would normally make someone look at a file and say “yep, looks benign to me”?

We were able to decrypt and parse the model’s data and retrieve the list of hashes of strings and associated actions. However, we didn’t have the actual strings themselves. This is when we had one of those eureka moments, without the bathtub.

By re-examining the centroids mechanism, we could observe the families of executables that Cylance’s team whitelisted. Perhaps a whitelist entry has been created at some time, after which a full re-train of the model was performed to correctly classify that family? If so, perhaps the model will be more biased towards strings taken from these types of executables.

We looked at the list which included only a few dozen centroids. Each centroid definition carried a name for identification and one of them stood out as it was a name of an online game. A quick conversation with one of the kids verified that it’s a well-known one. At the very least, it was popular enough to cause headaches and trigger special treatment from Cylance.

We purchased the game, and extracted all the strings contained in the main executable using a simple “strings” command, resulting in approximately 5 MB of strings. We then tried to find out exactly how the strings are parsed in order to better understand how we can make the parser pick them up. That is when we had our second eureka/lazy moment: let’s try the most naive solution and slap the strings onto the end of the file. That wouldn’t possibly ever work, would it?

We used the Mimikatz version that received a score of -852 and executed the following command:

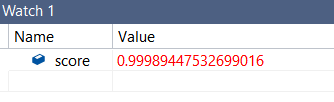

copy /b mimikatz.exe+strings.txt modified_mimikatz.exe

We then fed it back into the scoring mechanism and had our OMG² moment: the score was now 0.9998944… (= 999). This is almost a perfect score.

Did we just find a potential shortcut to kill the model? Is this the cheap bird costume we were after?

We went ahead and tested our solution with additional known malicious files and hacking tools and got consistent results — the score changed from a strong negative to a strong positive. We also confirmed that no other mechanisms were testing the behaviour of the files dynamically and blocking them, by executing these modified versions successfully on VMs running Cylance.

Our conclusion was that we managed to find a universal bypass. That is, a simple, passive method we can apply to almost any malicious executable to turn it into a FUD (fully undetected).

We have lots of experience in bypassing endpoint protection products, and we were always able to take an executable and a specific antivirus product and modify it in a way that will make it pass under the radar, but it was always very specific to the executable and required time and expertise. There are packers that can turn an executable into a FUD, but the process always involves staging which complicates things and suffers from compatibility issues. With this approach, we were able to apply the same simple solution to any executable to turn it into a FUD. All we had to do is append a specific set of known strings.

Fine Tuning

After our initial success, we were interested in narrowing down the list of strings we use, by filtering out strings that were not part of Cylance’s model.

On one hand, we parsed the relevant tables from Cylance’s model to obtain the full list of string hash values considered by the model. On the other hand, we calculated the hash of every string from the game. By intersecting these two lists, we managed to reduce the size of the added strings (“special sauce”) to a mere 60 KB. We have confirmed that reducing the size of the list did not affect our universal bypass. By appending those 60 KB to any executable we can change its score drastically, although, as we soon found out, some files were still detected as malicious, albeit with significantly better scores.
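The filtering step boils down to a set-membership test. The sketch below uses invented data and a stand-in hash (BLAKE2b truncated to 64 bits, not the model's real hash function); `keep_model_strings` is a hypothetical helper name.

```python
import hashlib


def hash64(text: str) -> int:
    """Stand-in 64-bit hash; swap in the model's real hash function."""
    digest = hashlib.blake2b(text.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "little")


def keep_model_strings(candidates, model_hashes):
    """Keep, in order and without duplicates, strings whose hash is in the model."""
    seen = set()
    kept = []
    for text in candidates:
        h = hash64(text)
        if h in model_hashes and h not in seen:
            seen.add(h)
            kept.append(text)
    return kept


# Hypothetical data standing in for the game's strings and the model's table.
game_strings = ["alpha", "beta", "alpha", "gamma"]
model_hashes = {hash64("alpha"), hash64("gamma"), hash64("delta")}
print(keep_model_strings(game_strings, model_hashes))  # ['alpha', 'gamma']
```

Dropping duplicates and strings the model never looks at is what takes the list from megabytes down to something small.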

Exam Time

Our first tests were against what we would consider the usual suspects (Mimikatz, ProcessHacker, Meterpreter, etc.) and proved successful. It was time to up the game and look at a wider test group.

We started with a list of the top ten malware as of May 2019, published by the Center for Internet Security.

The results were staggering:

| Malware | SHA256 | Score Before | Score After |

|---|---|---|---|

| CoinMiner | 1915126c27ba8566c624491bd2613215021cc2b28e5e6f3af69e9e994327f3ac | -826 | 884 |

| Dridex | c94fe7b646b681ac85756b4ce7f85f4745a7b505f1a2215ba8b58375238bad10 | -999 | 996 |

| Emotet | b3be486490acd78ed37b0823d7b9b6361d76f64d26a089ed8fbd42d838f87440 | -923 | 625 |

| Gh0stRAT | eebff21def49af4e85c26523af2ad659125a07a09db50ac06bd3746483c89f9d | -975 | 998 |

| Kovter | 40050153dceec2c8fbb1912f8eeabe449d1e265f0c8198008be8b34e5403e731 | -999 | 856 |

| Nanobot | 267912da0d6a7ad9c04c892020f1e5757edf9c4762d3de22866eb8a550bff81a | 971 | 999 |

| Pushdo | 14c358cc64a929a1761e7ffeb76795e43ff5c8f6b9e21057bb98958b7fa11280 | -999 | 999 |

| Qakbot | 869985182924ca7548289156cb500612a9f171c7e098b04550dbf62ab8f4ebd9 | -998 | 991 |

| Trickbot | 954961fd69cbb2bb73157e0a4e5729d8fe967fdf18e4b691e1f76aeadbc40553 | -973 | 774 |

| Zeus | 74031ad4c9b8a8757a712e14d120f710281027620f024a564cbea43ecc095696 | -997 | 997 |

As can be clearly seen, almost all of these samples have changed from the most evil file on the planet to your friendly neighborhood file. Again, the only treatment applied to these files is the addition of the “special sauce” as a simple concatenation.

Widening our test group further, we downloaded a list of 384 malicious files from online repositories and ran the test, receiving the following results:

Average score before secret sauce: -920

Average score after secret sauce: 630

Average delta: 1550 (out of a maximum of 2000)

Percentage of files bypassing detection: 83.59%

We also realized that if we add the secret sauce multiple times, we can improve the score even further. With this technique we achieved an average score of 750, and a whopping 88.54% of our malicious files were now marked as benign.
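As a quick sanity check on the figures above, the arithmetic can be reproduced directly. The file counts of 321 and 340 are not stated anywhere in this post; they are inferred here as the only whole numbers out of 384 that round to the reported percentages.

```python
TOTAL_FILES = 384

avg_before, avg_after = -920, 630
print(avg_after - avg_before)  # 1550, out of a possible 2000

# File counts are not given in the post; these are the only whole numbers
# of files (out of 384) that round to the reported percentages.
for bypassed in (321, 340):
    print(bypassed, f"{bypassed / TOTAL_FILES:.2%}")
# 321 83.59%
# 340 88.54%
```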

Impact and Final Thoughts

We are always amused to see the shock on people’s faces when you tell them that the new security toy they spent millions of dollars buying and integrating can be bypassed. The same goes for new silver bullets, like AI based security. We are anything but surprised by the results, and we are confident that the same type of process can be applied to other pure AI vendors to achieve similar results.

Why?

Vendors too often approach the security problem with a one punch solution. Hackers are not wooden dummies, they fight back, and you have to be ready for the counter-punch, constantly innovating and increasing the cost of attack.

The concept of a static model that lasts for years without update may hold theoretically, but it fails in the arena.

Granted, it is harder to find a bias in an AI model than to bypass a simple AV signature, but fixing a broken model is equally expensive.

We believe that the solution lies in a hybrid approach: using AI/ML primarily for the unknown, but verifying with tried and tested techniques used in the legacy world. This is really just another implementation of the defense in depth concept, applied to the endpoint protection world.

This means that the promise of a pure AI product may not be realized for EPPs, and vendors will have to maintain and update multiple systems of detection.

The promise of low resource consumption, with rare update cycles does not hold true for such a hybrid product, but it does provide a superior protective capability.

Till the next silver bullet…