All Your Cloud Are Belong To Us (CVE-2019-12491)

Serendipity

Many of the world’s greatest discoveries, in science, geography and elsewhere, are credited to chance. Antibiotics, corn flakes, and even the microwave were all discovered while looking for something else entirely.

So no, we did not make a discovery on the scale of corn flakes, which disrupted millions of children (and cows) around the world, but we did make a startling discovery with the potential to impact hundreds of thousands of production servers and businesses around the world.

TL;DR

OnApp is a cloud orchestration platform used by thousands of public and private cloud providers. By gaining access to a single server managed by OnApp, e.g. by renting one, we were able to compromise the entire private cloud. This is possible because of the way OnApp manages the servers in the cloud environment. OnApp exposes a feature that allows any user to trigger an SSH connection from OnApp to the managed server with SSH agent forwarding enabled. This allows an attacker to relay authentication to any other server within the same cloud, achieving RCE with root privileges. This method has been validated and replicated across multiple cloud vendors utilizing OnApp for XEN/KVM hypervisors.

You’ve got mail

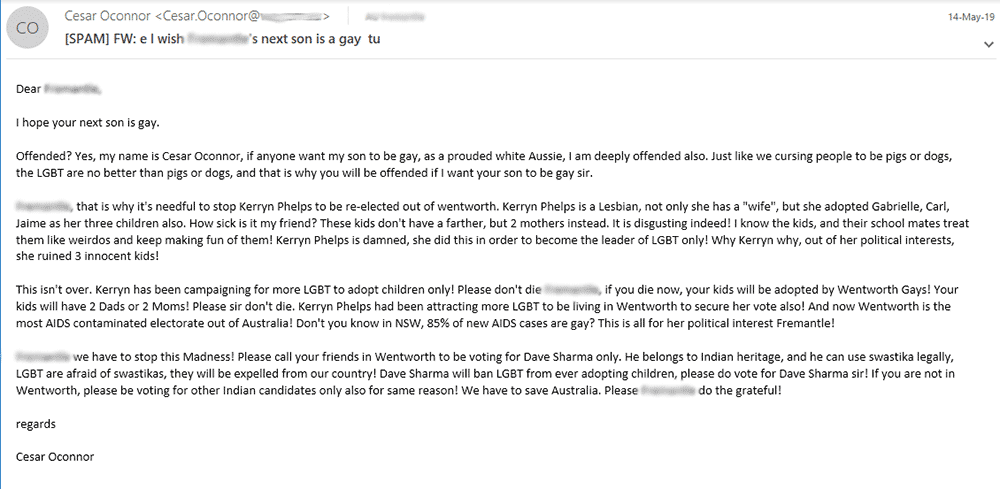

Right before the May federal elections in Australia, we were contacted by a member of the federal parliament who was then running for office.

He was concerned, as an unknown individual or group had been targeting his campaign for a long period of time, sending thousands of emails with the most vile hate messages. From race to sexual orientation, everyone was a target in these emails, except for the candidate. They only had warm words for him and generally speaking, Indian heritage candidates.

The emails had been forged to appear as if they were coming from many different small businesses in Australia and then forwarded, when in fact they originated from a single source.

This was a rather basic ruse to make it seem as if the candidate was connected to or condoning these messages, thereby damaging his campaign.

To our surprise, a lot of people were buying into it and constantly complaining to the campaign manager, accusing the campaign of being behind these emails.

The person or group behind this was extremely annoying and persistent, as we will shortly describe, and as such, for the remainder of this piece we will dub them APT, for Annoying Persistent Turds.

Be the ball

From the numerous emails we were forwarded, we managed to identify several servers that were used over a significant period of time by the APT to send the hate filled emails.

Our APT was really insistent on using a certain hosting company, most likely because it didn’t require any payment or identification to set up a free 24-hour trial server. Here, by the way, is a very important lesson for cloud providers: never provide free infrastructure that requires no identification. You’re literally asking for trouble.

While the hosting company was really responsive in taking down the APT’s server when contacted, the APT would just sign-up for a new one the next day, with a brand new GMail address (usually with some kind of Indian cultural reference).

Understanding that this cat and mouse game was becoming tiring and not yielding significant results, we decided to escalate our efforts.

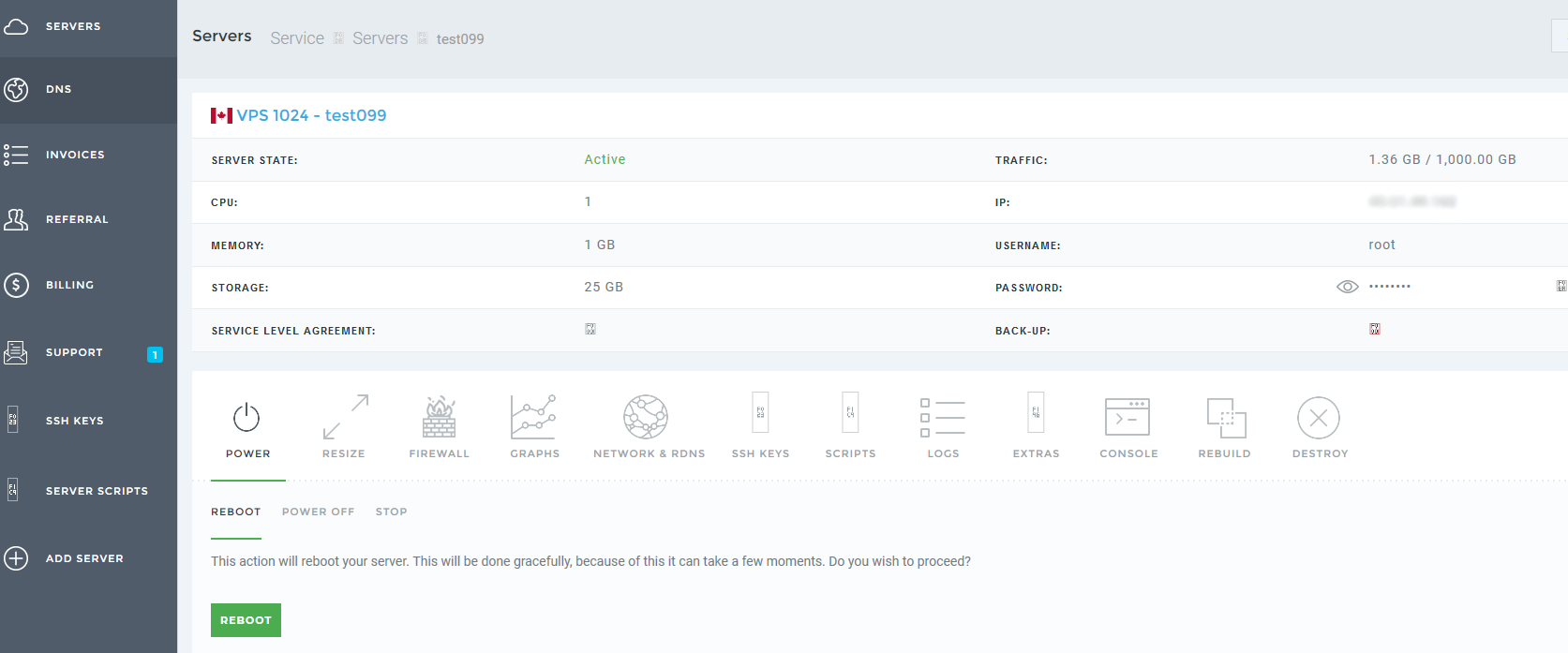

As a first step, we thought it would be a good idea to rent a free server ourselves, walk the path of the APT, understand the process they are going through, and maybe find some sort of issue or data we can use to our own advantage.

The process was pretty straightforward and indeed we saw that no details were required to set up a server except for an email address. Did we mention asking for trouble?

Our original goal was to see if the APT would likely leave some incriminating evidence during the registration process or server configuration, such that if authorities took hold of it, they could have something valuable.

With nothing of interest immediately visible, we started to hear the dark side calling us with its familiar voice.

Could we somehow find a vulnerability instead and just take control of their server?

We convinced ourselves that we would only poke around and check if it was possible, but wouldn’t actually go ahead with it.

Scout’s honour!

Orchestrated Chaos

The first and most prominent feature we had in front of us was the control panel used by the hosting company.

Now there are many ways to learn how to use a new technology or product like this one. Some opt for a user manual, others go to YouTube, and the most orderly ones seek a certification.

We, on the other hand, always believed that the best way to learn how something works is the “Drunken Monkey” technique - push every single button and see what happens.

You may break a few things along the way, but it’s a small price to pay for the gift of knowledge.

We applied the “Drunken Monkey” technique to the control panel, and pushed any and every button we could find.

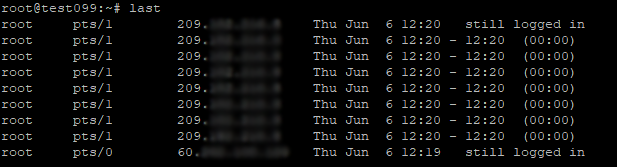

We then logged in to our server and executed different commands, looking at processes, services and sessions that might be of interest. We quickly realized that someone, or something, had logged into our server.

Hmmm…. hello there stranger… Who are you and why are you SSH-ing to my server?

Further testing showed that a button called “Add Local Network” on the control panel was responsible for triggering this connection.

Could this be the way this server is managed and configured by the control panel?

A very likely candidate in that case would be SSH, and if that is the case, there’s a good chance that we can find some evidence to back that up.

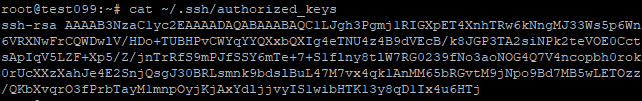

A quick look at the authorized_keys file showed that indeed someone had pre-installed a public key to be able to access our server.

Reality Check

One of the most effective skills in offensive cyber security is the ability to understand human nature and its many weaknesses. Social engineering is a well known implementation of that skill, but the ability to get into the head of designers, developers and architects is just as important.

It boils down to asking yourself “How would most people design a certain component given the technology, KPIs and constraints of the real world?”.

So in the context of this feature we asked ourselves - “Would it make sense to provide a different key pair per server? Probably not!”. More likely, the management software is using the same key pair to manage every server hosted by this company.

But even if they use the same key pair for all their servers we don’t have the private key, so it doesn’t help us just yet, we would need something else.

Time to roll our hoodies, dig deeper and see how it actually manages our server.

I’m root

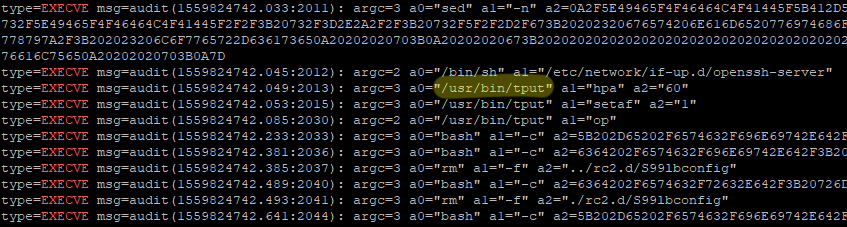

To understand what the management software might be doing on our server we used the built-in utility auditctl. Without a clear direction, we were looking at any and every executable running on our server.

auditctl -a exit,always -F arch=b64 -S execve

Sure enough, a list of executables emerged from the logs, and we were definitely not the ones running them. These were probably all the different management actions being conducted by the managing component, and we were looking for a good candidate to replace with our own code.
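A rule like this produces a lot of records, so it helps to boil the log down to a list of distinct executables. The following is a minimal sketch of one way to do that, not necessarily how we did it at the time: the function name `summarise_execs` is made up for illustration, and the log path in the usage comment is the usual auditd default, which may differ on your system.

```shell
# Summarise which executables appear in execve audit records.
# Reads audit log text on stdin and prints "count path", most frequent first.
# Sketch only: summarise_execs is a hypothetical helper name.
summarise_execs() {
    grep 'type=SYSCALL' \
        | grep -o 'exe="[^"]*"' \
        | sed -e 's/^exe="//' -e 's/"$//' \
        | sort | uniq -c | sort -rn \
        | sed -e 's/^ *//'
}

# Typical use on a live system (needs root; path is the common auditd default):
#   summarise_execs < /var/log/audit/audit.log
```

Anything near the top of that list that you did not run yourself is a candidate for closer inspection.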

That is, if we know that the management software is executing a certain file, and having root privileges on our own server, we can change the contents of the file to force the management software to run our code instead.

On top of that, we can use the “Add Local Network” button to trigger this code execution whenever we want.

Lastly, we have an assumption, which is that the same key pair is used across all servers in this hosting company.

These three together equate to drum roll… absolutely nothing! That is, unless we can somehow abuse the trust that the management software has in our server.

One Step Forward

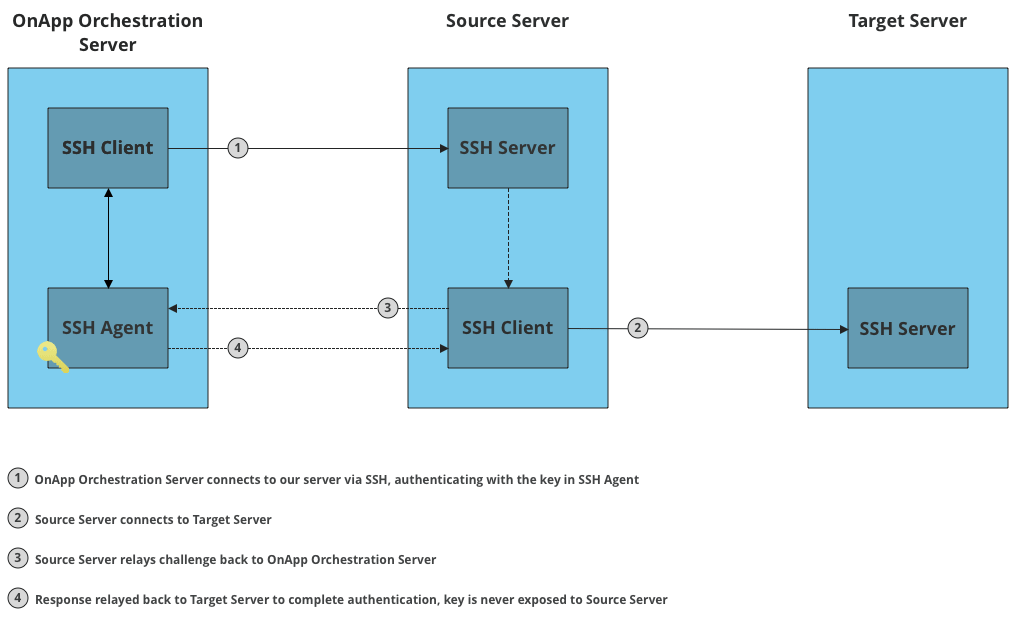

One of the useful features of SSH is called agent forwarding.

SSH agent forwarding allows you to connect to a remote machine (using SSH) and then provide that remote machine with the magical ability to SSH to other machines without ever possessing your private key or the passphrase that protects it.

The benefit of using this feature is that instead of leaving your private key on multiple servers (from which you connect to other servers), you keep it locally, and provide the remote server with the means to use your private key without exposing it.

Technically, the target server’s key challenge is relayed through the remote server back to the agent on your local machine, which signs it with the private key and sends the response back along the same path.

This is also an extremely dangerous feature, and documented as such.

With this feature enabled, a remote server accepting your SSH connection can now authenticate to any server that accepts your credentials.

If the hosting provider configured their SSH connection with agent forwarding, that would mean that we have a full chain, plus trigger, enabling us to compromise every single server on this hosting company with root privileges.
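We could not see how the provider's side was configured, but for anyone running a management host of their own, a quick way to spot the risky setting is to search the OpenSSH client configuration for it. This is a rough sketch under stated assumptions: `find_agent_forwarding` is a hypothetical helper, it only catches a literal `ForwardAgent yes` in a config file, and it will miss forwarding enabled on the command line with `-A` or `-o`.

```shell
# Print every line in an OpenSSH client config that turns agent forwarding on.
# Prints nothing and returns non-zero when no such line is found.
# Sketch only: does not follow Include directives or detect "ssh -A".
find_agent_forwarding() {
    grep -n -i -E '^[[:space:]]*ForwardAgent[[:space:]=]+"?yes' "$1"
}

# Typical use:
#   find_agent_forwarding /etc/ssh/ssh_config
#   find_agent_forwarding "$HOME/.ssh/config"
```

A hit under a broad `Host *` block is the pattern to worry about, since it forwards the agent to every server the client connects to.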

But that is too good (bad) to be true, right?

Scent of Blood

The most critical missing piece in the puzzle so far is the configuration of agent forwarding. If we can prove that point, we are only one assumption away from victory, or total failure and a waste of a perfectly good morning.

Ideally, we would validate this using two different servers - one that receives the incoming connection from the management component, and another, to which we will forward the connection.

However, laziness is the mother of all inventions, so we decided to first try this with a single server that connects to itself via agent forwarding. It’s crude, but it would still prove the point that agent forwarding is enabled.

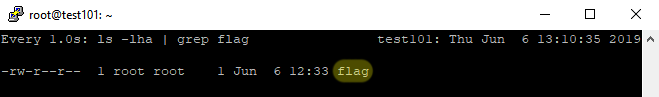

All we have to do is replace one of the binaries that the management component executes (e.g. tput) with a script of our choice that attempts to use the agent forwarding feature and write a flag file to disk. The script we will use to replace the tput binary will be something along these lines:

/usr/bin/ssh -o "StrictHostKeyChecking no" -A -E ~/log.txt -n -v 127.0.0.1 /bin/bash -c "echo 1 > /root/flag"
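A gentler signal than a full loopback login is whether the incoming session carries an agent socket at all, since sshd sets `SSH_AUTH_SOCK` in the session when it grants agent forwarding. The snippet below is a sketch of that check rather than what we actually ran; `agent_socket_status` is a name invented for this example.

```shell
# Report whether the current session has an SSH agent socket available.
# With agent forwarding granted, sshd points SSH_AUTH_SOCK at a Unix socket.
# Sketch only: agent_socket_status is a hypothetical helper name.
agent_socket_status() {
    if [ -n "${SSH_AUTH_SOCK:-}" ] && [ -S "$SSH_AUTH_SOCK" ]; then
        echo "agent socket present: $SSH_AUTH_SOCK"
    else
        echo "no agent socket"
        return 1
    fi
}
```

When a socket is present, `ssh-add -l` lists the identities the agent is willing to use, without ever revealing the private keys themselves.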

All is in place, now we have to push the trigger, and wait for a result.

Authentication succeeded! The unmistakable scent of blood started creeping into our nostrils.

At this point, we were so excited that we did the unthinkable and postponed lunch indefinitely. That Pad See Ew will have to wait.

All Your Cloud

Just to recap, at this point we have proven that:

- We can trigger the management software to SSH to our server and run commands.

- We can switch the code it was meant to execute with arbitrary code by replacing one of the binaries it commonly executes.

- The management software does in fact use the SSH agent forwarding feature.

If we manage to prove that it uses the same key pair for all of the servers in the current hosting company it literally means that we pwned all of their servers.
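One cheap hint about key reuse, short of the full test, is to compare the public keys pre-installed on two servers rented from the same provider. The sketch below assumes you have copied both `authorized_keys` files locally; `shared_keys` and the file names in the usage comment are hypothetical, and it only handles key lines that start with the key type (no leading options field).

```shell
# Print the public-key blobs that appear in both authorized_keys files.
# Sketch only: shared_keys is a hypothetical helper; lines with a leading
# options field (e.g. command="...") are ignored.
shared_keys() {
    a=$(mktemp) && b=$(mktemp) || return 1
    awk '$1 ~ /^(ssh-|ecdsa-)/ {print $2}' "$1" | sort -u > "$a"
    awk '$1 ~ /^(ssh-|ecdsa-)/ {print $2}' "$2" | sort -u > "$b"
    comm -12 "$a" "$b"
    rm -f "$a" "$b"
}

# Typical use (file names are placeholders):
#   shared_keys server1_authorized_keys server2_authorized_keys
```

A shared blob only shows that the same public key is trusted on both machines; it takes the forwarding test to show that the matching private key can actually be used.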

With much excitement in the air, and our office space neighbours now visibly annoyed at our increasingly loud ramblings, we set off to the final test.

We will do the following:

- Set up a source server, which is the one an attacker could have by signing up for a trial, or renting a server.

- Set up a target server, which is well, a target.

- Overwrite tput on the source server with a script of our own that utilizes SSH forwarding to connect to the target server and drop a flag file.

- Trigger the management software.

- Chew on our fingers anxiously waiting for the flag file to appear on the target server.

- Go get that Pad See Ew!

Do you know how things never work on the first try, especially when Murphy’s law is involved?

Apparently Murphy was too hungry to stay and ruin our experiment, because this was one of the rare times we can remember something like this working on the first try: the target server showed a beautiful root-owned flag file, right where we put it.

If you are more of a video person, here’s the full exploitation flow:

Impact

After celebrating a very late lunch, we set about to further investigate the potential impact of this vulnerability.

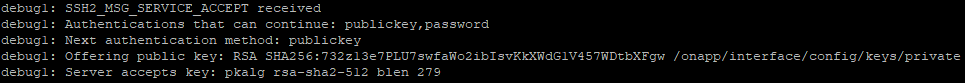

The log file of our SSH client revealed a few more details about the management software. The path of the key used for authentication, with a bit of help from Google, pointed to a company called OnApp.

Apparently, this was not a local feature used by this one hosting company, rather a cloud orchestration product used by many different hosting companies and private cloud providers around the world.

If we could replicate this across other companies then the impact is much greater and more dangerous.

All we have to do is find cloud providers using OnApp, rent a couple of servers, and test our thesis again. After two more successful attempts we decided to stop and declare victory (or defeat for security). This was not limited to one specific hosting company, rather had the potential to impact many different companies using OnApp for orchestration.

Understanding exactly which companies were affected was a bit beyond the scope of our already off the rails investigation, but as of 2017 OnApp claimed 3,500 customers, each running their own private and public clouds, each having thousands of customers of their own.

The impact was quite clear and significant - this had the potential to compromise hundreds of thousands of servers and businesses. Ouch.

Responsible Adults

Being the responsible adults that we are, we initiated a responsible disclosure process, contacting both the local CERT and the vendor.

Hats off to OnApp, who were extremely quick to respond, acknowledge the issue, and provide us with a timeline for fixing it.

We wish we had warm words for the CERT as well, but we literally did not get a response, not even after we called a few times to let them know that we had sent them a rather important email.

The vulnerability has been assigned the ID CVE-2019-12491.

OnApp advisory is also available here.

And what about our APT?

We provided the Australian Federal Police with all the information we gathered through our investigation, with enough threads to pull to get to the APT’s real identity.

Yes, we had the technical capability to take control of the APT server, but we didn’t, even though the little voices in our head said otherwise, repeatedly.

The legal reality is that “hack back” laws are unclear and even if you want to stop an ongoing attack or crime that is being committed, you can’t do so legally in Australia, as far as we understand. Maybe it’s different in Texas.